What Freya users actually want

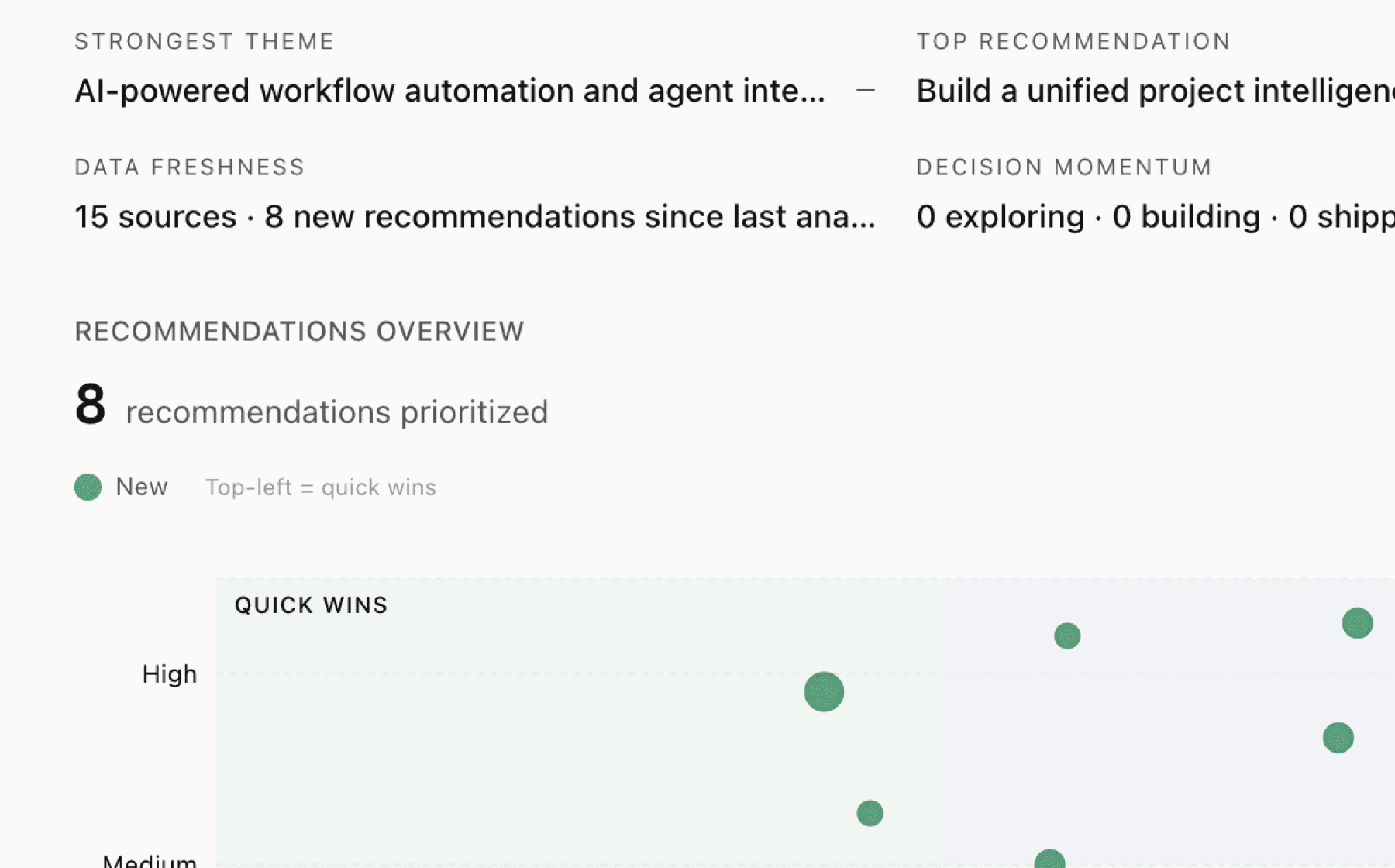

Mimir analyzed 5 public sources (app reviews, Reddit threads, and forum posts) and surfaced 17 patterns and 6 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Build a compliance-first conversation designer that validates every utterance against regulatory requirements in real time

High impact · Large effort

Rationale

Regulatory compliance is the biggest source of friction for enterprise adoption in banking, healthcare, and insurance. Every hesitation, inflection, and word choice must be defensible under audit, yet current voice AI tools force teams to retrofit compliance after they design natural-sounding conversations. This creates a false choice between regulatory safety and customer experience.

A visual conversation designer that validates each phrase against industry-specific rules during the build would reduce compliance anxiety and speed up deployment. The tool should flag risky language instantly and suggest compliant alternatives that preserve conversational flow. This addresses the core tension between natural-sounding interactions and regulatory guardrails.

Domain-tuned models already deliver 30% better accuracy in insurance contexts. Embedding compliance validation into the design workflow in the same way could turn a blocker into a competitive advantage: enterprise customers could deploy faster, audit with confidence, and scale with less regulatory risk.


The full product behind this analysis

Mimir doesn't just analyze; it's a complete product management workflow, from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

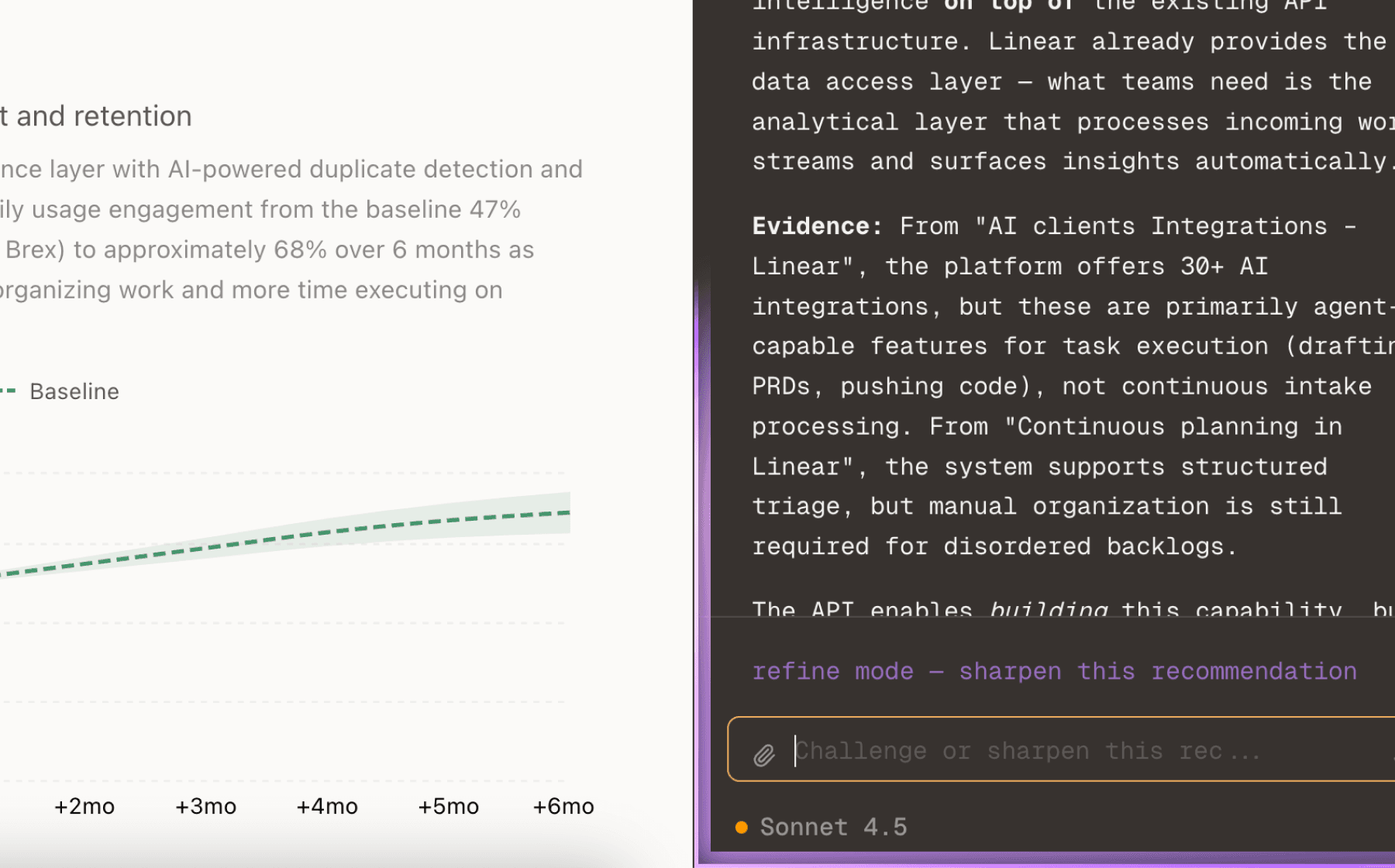

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

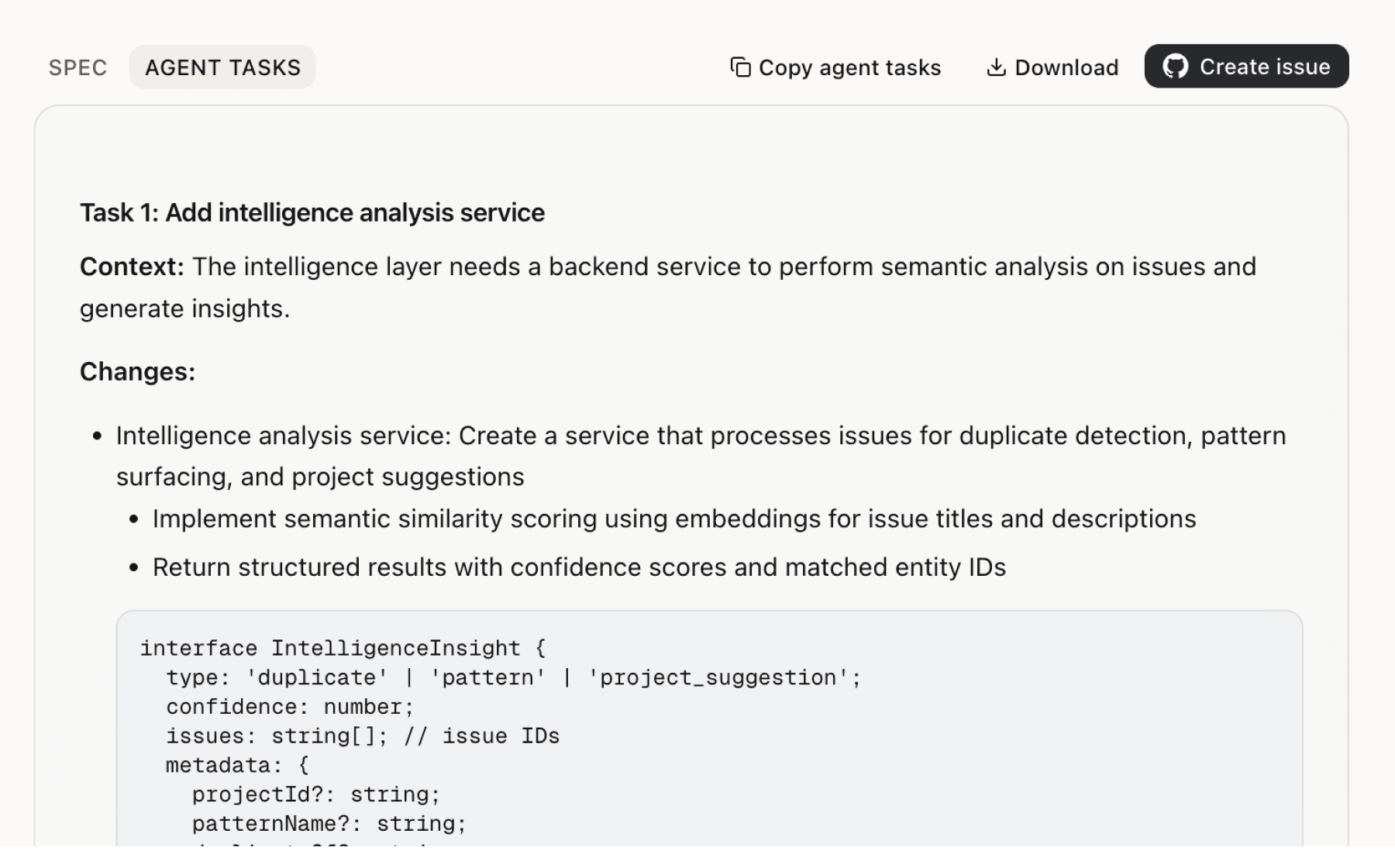

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

Try with your data

More recommendations

5 additional recommendations generated from the same analysis

Enterprise customers in regulated industries raise serious data privacy concerns, and Freya's current approach leaves a trust gap. Freya commits to zero data retention for customer inputs, but the platform also uses de-identified, aggregated data, including voice recordings and transcripts, for model improvement. For teams handling sensitive voice biometrics in banking and healthcare, de-identification alone may not feel sufficient.

Scaling to thousands of simultaneous calls while maintaining conversation quality is a fundamental technical challenge. Product managers and engineering leads need confidence that nuance, context, and emotion stay consistent at scale, but they currently have limited visibility into how quality holds up under load. The January 2025 prototype struggled through its first real customer call, which suggests quality assurance may still be an active concern.

Domain-tuned AI training delivers 30% better accuracy than generic models in insurance contexts, but this advantage only matters if customers can access industry-specific knowledge without building it themselves. Product managers need pre-built conversation flows, terminology libraries, and workflow integrations for common use cases in regulated industries.

Product managers explicitly request lead qualification with smart prospect scoring and automated renewal outreach at optimal timing. These capabilities directly address revenue acquisition and retention, the two primary business drivers for enterprise customers adopting voice AI.

Enterprise customers require granular control over who can access voice AI systems, especially when those systems handle sensitive customer data. Freya's current account security model makes users responsible for maintaining their credentials and not sharing access, which does not align with enterprise identity and access management (IAM) practices.

Insights

Themes and patterns synthesized from customer feedback

Freya is at an early stage of product development: it joined Y Combinator's Summer 2025 batch (S25) after one year of R&D, and its initial prototype struggled in January 2025. The company has achieved key compliance certifications, but the evidence points to possible ongoing challenges with real-world deployment and conversation quality.

“Initial prototype struggled through first real customer call in January 2025, indicating early-stage product maturity.”

Freya commits to zero data retention for customer inputs and states that it does not use customer data for model training, yet it also uses de-identified, aggregated customer data (including voice recordings and transcripts) to improve its AI models. This creates an operational tension: enterprises may question whether de-identification is sufficient, especially when biometric data such as voiceprints is involved.

“Freya does not use customer data, inputs, or work products for model training—only publicly available data for validation.”

Freya requires users to maintain account security and not share credentials, and the company retains the right to suspend accounts for violations. For regulated enterprise customers, this means shared responsibility for security, along with potential friction around account provisioning and access control.

“Website requires account creation for some or all Services; users must not share account credentials and are responsible for account security.”

Users request voice verification plus SMS two-factor authentication to secure access to customer data. This would help meet authentication requirements in financial and healthcare contexts.

“Voice verification plus SMS two-factor authentication for secure customer data access.”

An insurance-focused feature request asks for automated quote intake that gathers customer information, assesses risk, and delivers competitive quotes within the conversation. This is a high-value use case, but it appears to be a lower priority than the core compliance and scaling challenges.

“Quote intake automation to gather customer info, assess risk, and deliver competitive quotes in conversation.”

For enterprise customers in financial services and healthcare, Freya may be subject to longer mandatory data retention periods under applicable regulations. Freya acknowledges this, but managing variable retention periods across customer segments remains an operational and configuration challenge.

“For enterprise customers in regulated industries, Freya may be subject to longer data retention periods under applicable laws, such as financial or healthcare regulations.”

Freya reports measurable cost reduction for insurance companies that use it to automate contact center and customer service operations. This is a tracked metric that points to concrete business value.

“Cost reduction achieved by insurance companies using Freya.”

Freya tracks service accuracy rate for call handling as a core performance metric. This suggests the company is focused on measuring real-world quality, but details on baseline accuracy or improvement targets are not provided.

“Service accuracy rate for call handling.”

Freya does not store full payment card details; payments are processed by a third-party provider. This reduces the PCI compliance burden but introduces an operational dependency on external payment services.

“Freya does not store full payment card details; payment processed by third-party provider.”

Product managers and engineering leads need to deploy voice AI across thousands of simultaneous calls while preserving the consistency, nuance, and quality of individual customer interactions. Early prototype struggles suggest this remains a technical challenge that requires careful attention to context, emotion, and conversation flow at scale.

“Platform capability to deploy voice AI at instant scale while maintaining conversation quality and consistency across high call volumes.”

Generic AI models underperform in specialized domains: in insurance, domain-tuned training on terminology, claims workflows, and policy language delivers 30% better accuracy than generic AI. This suggests that enterprise adoption depends on deep domain customization rather than one-size-fits-all models.

“Domain-tuned AI training on insurance terminology and workflows delivers 30% better accuracy than generic AI.”

Building empathetic, natural-sounding voice AI requires careful attention to word choice and linguistic precision, not just tone and inflection. This finding suggests that linguistic fidelity, meaning the ability to use the right words in sensitive moments, is a critical factor in customer perception and trust.

“Natural-sounding voice AI requires precise word choice in sensitive moments, not just tone of voice, to convey empathy effectively.”

Product managers are requesting automated lead qualification with smart prospect scoring and instant routing, as well as automated renewal outreach at optimal timing to prevent churn. These features address two critical business drivers: revenue acquisition and retention.

“Lead qualification with smart prospect scoring and instant routing to sales team.”

Freya handles inbound and outbound calls across support, service, and sales in dozens of languages with 24/7 availability, addressing the needs of enterprises with global customer bases. This capability is present but appears to be a table-stakes feature rather than a differentiator.

“Freya handles inbound and outbound calls across support, service, sales in dozens of languages, 24/7.”

Enterprises require voice AI solutions that deploy in days rather than months and integrate seamlessly with existing CRM, AMS, payment systems, and custom applications. This appears to be a key competitive advantage for Freya.

“Deploy in days, not months with seamless integration to CRM, AMS, payment systems, and custom applications.”

Enterprise customers in banking, healthcare, and insurance require strict data handling practices. Freya addresses this with zero data retention policies, no use of customer data for model training, complete deletion capabilities, and SOC 2 and GDPR compliance. Even so, managing sensitive voice biometrics and transcripts across regulated industries remains a significant operational concern.

“Freya does not use customer data, inputs, or work products for model training—only publicly available data for validation.”

Enterprise customers in regulated industries face complex compliance requirements: every aspect of an AI voice, including word choice, tone, hesitations, and inflections, must be justified and defensible under regulatory audit. This creates significant friction when deploying natural-sounding voice AI while maintaining compliance guardrails.

“Every hesitation and pitch inflection in AI voice must be justified and defensible under regulatory audit, creating complex compliance burden.”

Run this analysis on your own data

Upload feedback, interviews, or metrics. Get results like these in under 60 seconds.