What Omnara users actually want

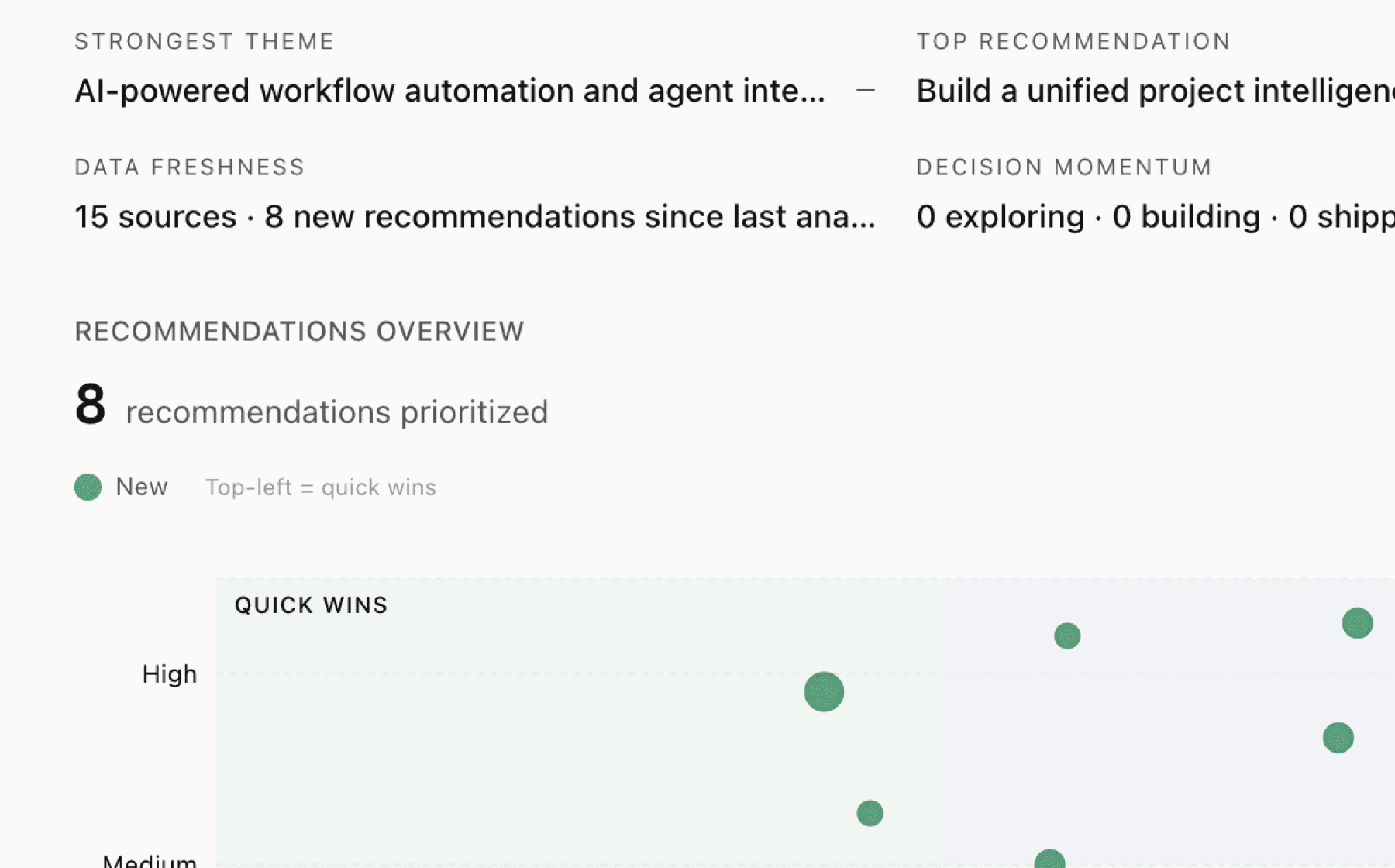

Mimir analyzed 8 public sources — app reviews, Reddit threads, forum posts — and surfaced 12 patterns with 7 actionable recommendations.

This is a preview. Mimir does this with your customer interviews, support tickets, and analytics in under 60 seconds.

Top recommendation

AI-generated, ranked by impact and evidence strength

Build seamless local-to-cloud agent handoff with automatic session migration

High impact · Large effort

Rationale

The most urgent technical gap blocking widespread adoption is the inability to run agents on local machines while maintaining remote accessibility. Developers need agents that understand their specific codebase and environment setup, but current tools force a choice between local-only execution (desk-bound) or cloud agents that lack context. 6,000+ users are already adopting mobile-first workflows, signaling strong demand for this capability.

This represents the core architectural moat for Omnara. Solving the local-cloud hybrid workflow puts the product in a defensible position that competitors haven't addressed. The technical challenge is real: a seamless offline-to-cloud transition with session sync requires sophisticated state management. But it directly unlocks the primary value proposition of coding away from the desk.

Prioritize this over incremental features because it removes the fundamental blocker preventing users from fully adopting the mobile workflow. Without reliable handoff, users remain tethered to their desk during critical operations, undermining the entire product premise. Success here drives retention and word-of-mouth among the target audience of engineering leads who evaluate tools based on technical sophistication.
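To make the state-management challenge concrete, here is a minimal sketch of what a session handoff could look like, assuming the session can be reduced to a serializable snapshot. The names `AgentSession`, `snapshot`, and `resume_on` are hypothetical illustrations, not Omnara's actual API, and a real implementation would also need to sync files, environment, and in-flight tool calls.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AgentSession:
    """Hypothetical, simplified session state for illustration only."""
    session_id: str
    host: str  # "local" or "cloud" (assumed labels)
    messages: list = field(default_factory=list)
    version: int = 0  # incremented on every change so stale copies can be detected

    def append(self, message: str) -> None:
        self.messages.append(message)
        self.version += 1


def snapshot(session: AgentSession) -> str:
    """Serialize the full session state so another host can pick it up."""
    return json.dumps(asdict(session))


def resume_on(snapshot_json: str, new_host: str) -> AgentSession:
    """Rebuild the session on a different host from a snapshot."""
    data = json.loads(snapshot_json)
    data["host"] = new_host
    return AgentSession(**data)


# The local machine records work, then hands off when it goes offline.
local = AgentSession(session_id="s1", host="local")
local.append("refactor auth module")
cloud = resume_on(snapshot(local), "cloud")
```

The version counter is one simple way to tell which copy is newer when the local machine reconnects; conflict resolution between diverged copies is left out of this sketch.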

The full product behind this analysis

Mimir doesn't just analyze — it's a complete product management workflow from feedback to shipped feature.

Evidence-backed insights

Every insight traces back to real customer signals. No hunches, no guesses.

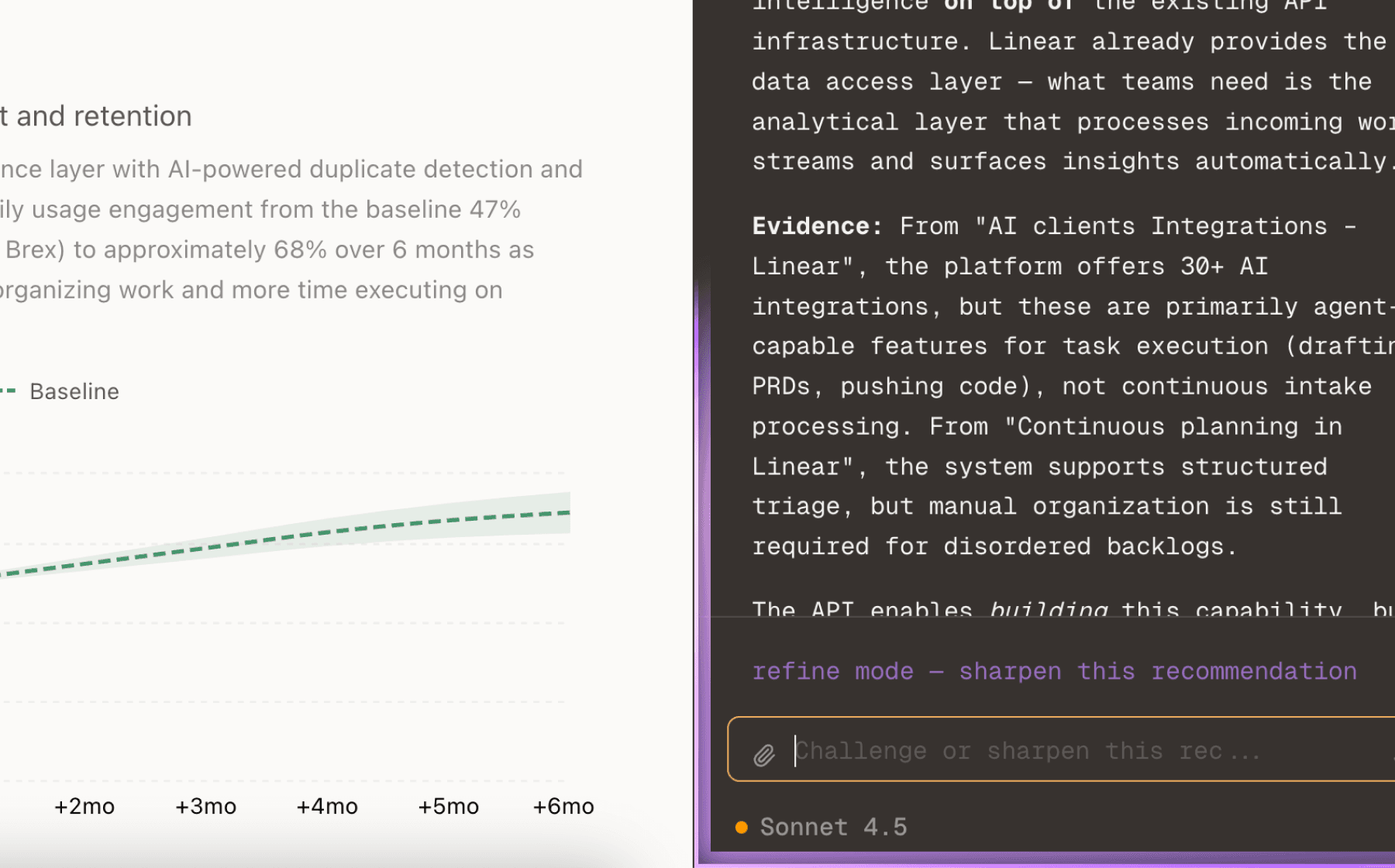

Chat with your data

Ask follow-up questions, refine recommendations, and capture business context through natural conversation.

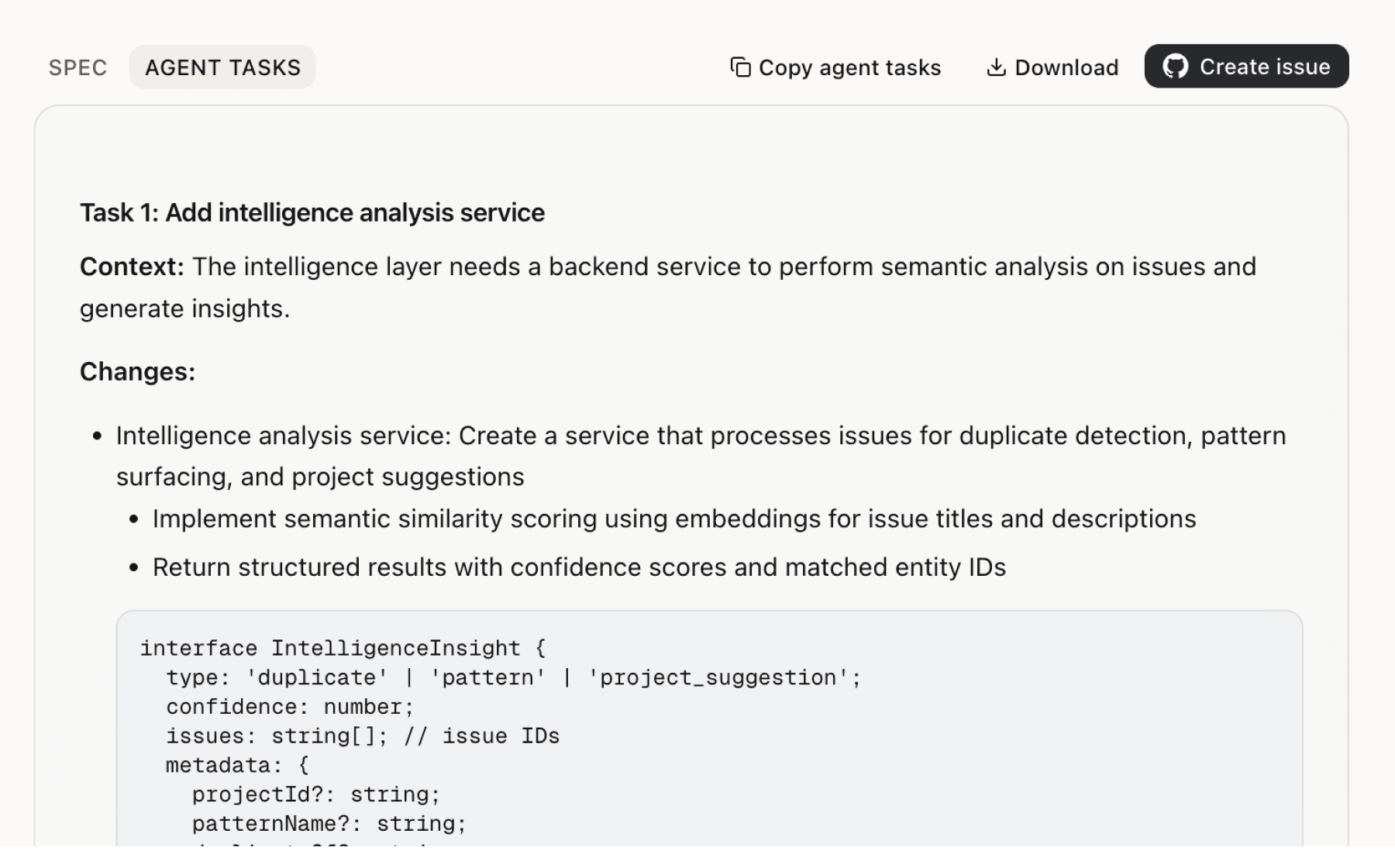

Specs your agents can ship

Go from insight to implementation spec to code-ready tasks in one click.

This analysis used public data only. Imagine what Mimir finds with your customer interviews and product analytics.

More recommendations

6 additional recommendations generated from the same analysis

Voice input is not just a convenience feature—it fundamentally changes how developers express complex architectural ideas and iterate on prompts. Users report that talking is faster than typing for articulating ideas and exploring directions, and it maintains coding flow without breaking context. This suggests voice should be the primary interaction model, not an alternative input method.

Omnara collects sensitive data including conversation history, message content, and device information, while explicitly disclaiming liability for breaches and security incidents. This creates material risk for enterprise adoption—the fastest path to increasing ARPU and proving the Pro tier business model. Engineering leads and founders evaluating tools for their teams cannot accept broad liability waivers when the product handles their codebase.

Multiple concurrent agents editing the same codebase cause conflicts that real engineering teams avoid through isolation. Current workarounds (worktrees, directory clones, containers) solve the technical problem but increase context-switching burden on users. Managing multiple agents has a ceiling effect—the force multiplier benefit diminishes as coordination overhead grows.

The term async agent has dozens of conflicting definitions across the industry, ranging from long-running tasks to cloud deployment to event-triggered execution. This semantic confusion undermines thought leadership positioning and makes it harder for potential users to understand what Omnara does differently. The blog attempts to establish a precise definition (agent managing other agents concurrently) but lacks the distribution to set industry standards.

Omnara disclaims liability for AI response accuracy, appropriateness, or suitability, but the product's core function is delegating coding work to AI agents. This creates tension between user expectations (agents produce correct code) and product guarantees (no warranty of accuracy). For individual developers, this is acceptable risk, but for enterprise teams, incorrect agent output can propagate through codebases and cause production issues.

The pricing model positions the Pro tier as the core business engine, but no revenue metrics are disclosed, so it is unclear whether conversion rates and ARPU can sustain growth. With 6,000+ users and 2 million messages sent, there is enough volume to analyze conversion behavior, but without instrumentation the team is flying blind on monetization effectiveness.
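As a rough illustration of the two metrics named above, the following sketch computes free-to-Pro conversion rate and ARPU from user counts. The $20/month Pro price comes from the published pricing; the user counts in the example are placeholders chosen for easy arithmetic, not Omnara data, and Enterprise revenue is ignored because its pricing is custom.

```python
PRO_PRICE_PER_MONTH = 20.0  # Pro tier price from the published pricing


def conversion_rate(pro_users: int, total_users: int) -> float:
    """Share of all users who are on the paid Pro tier."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    return pro_users / total_users


def arpu(pro_users: int, total_users: int) -> float:
    """Average monthly revenue per user, counting free users in the denominator."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    return pro_users * PRO_PRICE_PER_MONTH / total_users


# Placeholder counts for illustration only (not real Omnara figures).
example_total = 1000
example_pro = 50
example_conversion = conversion_rate(example_pro, example_total)
example_arpu = arpu(example_pro, example_total)
```

With these placeholder counts, 5% of users convert and ARPU is $1.00 per month, which shows how strongly ARPU depends on conversion when most users sit on the free tier.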

Insights

Themes and patterns synthesized from customer feedback

Early user feedback shows genuine enthusiasm and adoption signals, with users comparing Omnara's reception to the famous Dropbox HN comment that presaged strong market uptake. Multiple users report high satisfaction with current functionality ("Enjoying your app so much now that it works"), suggesting product-market fit may be stabilizing after initial refinement.

“User compared Omnara's reception positively to the famous Dropbox HN comment, suggesting strong product-market fit signals and viral potential”

Omnara publishes engineering deep dives and conceptual blog posts (e.g., 'Beyond the Desk', 'What Is an Async Agent, Really?'), positioning the company as a thought leader in agent programming paradigms and async architecture. This content strategy builds credibility with target users (engineering leads, founders), but its effectiveness depends on audience reach and citation influence.

“Omnara publishes product updates and engineering deep dives as part of their content strategy”

Omnara disclaims liability for AI response accuracy, appropriateness, or suitability, and provides no warranty of error-free performance or freedom from viruses. This broad disclaimer combined with the product's core function (delegating coding work to AI agents) creates potential friction between user expectations and actual product guarantees, particularly when agents produce incorrect or problematic code.

“Company disclaims liability for AI response accuracy, appropriateness, or suitability for user purposes”

At least one user encountered a 404 error when accessing the Omnara website, indicating either broken links or outdated page references. While the 404 page includes helpful navigation links, this friction point during the critical onboarding moment could impact conversion from awareness to trial.

“User encountered a 404 error page, indicating they attempted to access a non-existent or moved page on Omnara's website”

One user requested a dictation feature in the mobile app for hands-free voice input as a companion to the existing conversational voice agent for coding. This suggests interest in voice as a general input mechanism, not just for agent interaction, potentially broadening the voice interface value proposition.

“User requested dictation feature in the mobile app for hands-free voice input”

Developers need to run agents on their own machine (for codebase and environment familiarity) while staying remotely connected, with a seamless offline-to-cloud transition if the local machine disconnects. This hybrid requirement, combining local execution with remote accessibility, is a fundamental architectural challenge that Omnara is positioned to solve, but the full implementation details are unclear.

“Current agent tools have a critical gap: local agents only work at the desk, while hosted agents disconnect from familiar codebases and developer setups”

The term 'async agent' is used inconsistently across the industry with dozens of conflicting definitions—ranging from long-running tasks to cloud deployment to event-triggered execution. This semantic confusion risks undermining Omnara's thought leadership positioning, as the blog attempts to establish a more precise definition (agent managing other agents concurrently) but the broader industry remains unaligned.

“Term 'async agents' is used inconsistently across industry—dozens of posts use the phrase with different definitions, appearing in product announcements and architecture discussions without clarity.”

Omnara's pricing structure (Free: 10 sessions/month, Pro: $20/month unlimited) uses the free tier primarily as an acquisition funnel, with Pro designed as the standard commercial offering. Enterprise tier targets team collaboration and compliance requirements, but no revenue metrics are disclosed, making it unclear whether conversion rates and ARPU are sufficient to sustain growth.

“Product offers freemium pricing model with Free ($0), Pro ($20/month), and Enterprise (custom) tiers”

Omnara explicitly disclaims liability for data breaches, hacks, and security incidents, stating no method of transmission or storage is 100% secure. This broad liability waiver combined with collection of sensitive data (conversation history, message content, device information) creates potential regulatory and reputational risk, particularly for enterprise customers.

“No method of internet transmission or electronic storage is 100% secure; Omnara cannot guarantee absolute data security and disclaims liability for breaches beyond reasonable control”

Running multiple agents editing the same codebase simultaneously causes conflicts that real engineering teams avoid through isolation. Current tools (Omnara, Conductor, Boris) solve this with worktrees, directory clones, or containers, but managing multiple concurrent agents increases context-switching burden on users and suggests architectural limits to simple force-multiplier scaling.

“Multiple concurrent agents editing the same codebase on one machine causes conflicts. Real engineering teams isolate work—each person/agent needs their own environment.”
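The worktree approach mentioned above can be sketched as follows: each agent gets its own branch and working directory so concurrent edits never touch the same checkout. The helper name `worktree_command`, the `agent/<name>` branch scheme, and the sibling-directory layout are assumptions for illustration; only the `git worktree add -b <branch> <path>` command itself is standard Git.

```python
from pathlib import PurePosixPath


def worktree_command(repo_root: str, agent_name: str) -> list[str]:
    """Build the git command that gives one agent an isolated checkout.

    The worktree is placed next to the repository, e.g. /work/app-a1,
    on a new branch named agent/<agent_name> (both naming choices are assumptions).
    """
    root = PurePosixPath(repo_root)
    worktree_path = root.parent / f"{root.name}-{agent_name}"
    branch = f"agent/{agent_name}"
    return ["git", "-C", repo_root, "worktree", "add", "-b", branch, str(worktree_path)]


def plan_worktrees(repo_root: str, agent_names: list[str]) -> list[list[str]]:
    """One command per agent; names must be unique so paths and branches do not collide."""
    if len(set(agent_names)) != len(agent_names):
        raise ValueError("agent names must be unique")
    return [worktree_command(repo_root, name) for name in agent_names]


# Build (but do not run) the commands for two hypothetical agents.
commands = plan_worktrees("/work/app", ["a1", "a2"])
```

Each command list could be passed to `subprocess.run` to create the worktree. This isolates the files, but as the insight notes, the user still has to track and merge each agent's branch, which is where the coordination overhead comes from.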

Developers strongly value the ability to maintain coding progress away from their desk, viewing forced desk attachment during long-running operations as a significant friction point. Early adoption data shows 6,000+ users naturally adopting mobile/remote-first workflows for coding tasks, with usage while walking, driving, and at the gym, indicating a genuine behavioral shift rather than an edge case.

“I've always wondered while waiting for AI operations to complete why I'm 'tied' to my machine and can't just shut my laptop while it worked and see what it'd done later.”

Developers report that voice input significantly accelerates ideation and iteration by eliminating the friction of typing complex prompts and architectural explanations. Multiple users emphasize that conversational expression enables faster exploration of directions and maintains coding flow without breaking context.

“Talking is simply quicker than typing. You can articulate more ideas, explore more directions, and iterate without breaking your flow to write long prompts or explanations, this is f'ing cool...”

Run this analysis on your own data

Upload feedback, interviews, or metrics. Get results like these in under 60 seconds.